Why Validated AI Never Reaches Patients: Regulation Gap

NeuroEdge Nexus — Season 1, Week 3 (October 2025) PART 1

Validation without pathways to clinical adoption is like designing a bridge that nobody can cross.

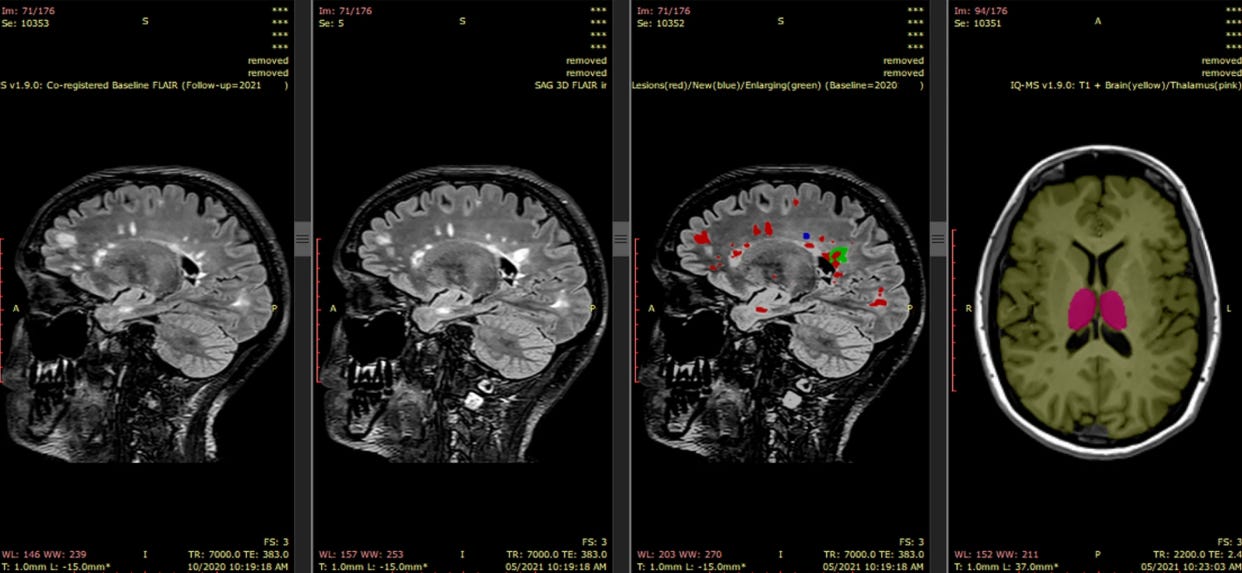

Barnett M et al. A real-world clinical validation for AI-based MRI monitoring in multiple sclerosis. NPJ Digit Med. 2023;6:196.


Over three years, from 2019 to 2022, a large academic medical center in the Netherlands worked to implement artificial intelligence across its radiology department. What it discovered reveals why even validated algorithms struggle to reach clinical practice, and why regulatory frameworks, not technical performance, determine whether AI helps patients.

Last week, we discussed AI fundamentals and infrastructure challenges. This week, we examine the regulatory and organizational frameworks that determine whether AI reaches clinical practice, and what one institution learned from three years of navigating these barriers.

The Reality Check

A comprehensive longitudinal study published in Insights into Imaging (2024) documented the real-world challenges of implementing AI in clinical radiology at a major European academic medical center. Over three years, the researchers conducted 43 days of work observations, 30 meeting observations, and 18 interviews, and analyzed 41 documents to understand what actually happens when hospitals try to deploy AI tools (“A holistic approach to implementing artificial intelligence in radiology,” Insights into Imaging).

The findings were instructive. Before the institution had established proper infrastructure, implementing a single AI application took 18 to 24 months, and most of that time went to legal documentation, regulatory compliance, contractual negotiations, and data governance frameworks, not to algorithm validation or clinical testing (Insights into Imaging, 2024).

This was not a failure of artificial intelligence. It was a failure of regulatory and organizational systems to keep pace with technological capability.

The institution eventually succeeded by building what the researchers called a “holistic approach”: addressing regulatory compliance, data sovereignty, technology infrastructure, workflow integration, and organizational culture simultaneously, rather than treating AI deployment as a purely technical problem (Insights into Imaging, 2024). Its experience offers critical lessons for neurology, where similar AI tools promise significant clinical value yet face the same regulatory and governance barriers.